Machine learning is no longer just a research topic or a buzzword in tech circles. It’s powering search engines, fraud detection, recommendation systems, medical diagnostics, and much more, often in ways we don’t even notice.

In this guide, we’ll walk through what machine learning actually is, how it differs from traditional programming, the main types and algorithms, and how real-world projects come together. Our goal is to give you a clear, practical understanding of why machine learning matters and how it can be applied to solve real problems and create value.


What Machine Learning Is And How It Differs From Traditional Programming

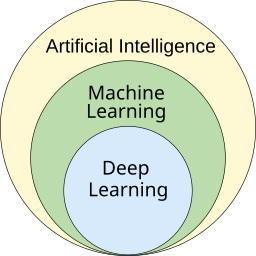

Image credit: Lollixzc, CC BY-SA 4.0, via Wikimedia Commons

At its core, machine learning (ML) is a way of getting computers to learn patterns from data and make decisions or predictions without being explicitly programmed for every possible situation.

In traditional programming, we write explicit rules:

- Input + Rules → Output

For example, if we want to flag suspicious transactions, we might hand-code rules like: “if amount > $5,000 and country is X, flag as risky”. A developer manually thinks through every condition.

With machine learning, the process is flipped:

- Input + Examples (Data) → Algorithm learns the Rules → Output

Instead of writing rules ourselves, we give the algorithm many examples of past transactions labeled as “fraud” or “not fraud”. The algorithm learns patterns from those examples and creates its own internal rules. Then it uses those rules to make predictions on new data.
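As a minimal sketch of this flip, the snippet below contrasts a hand-coded rule with a threshold learned from labeled examples. The data, the function names (`hand_coded_rule`, `learn_threshold`) and the single-feature setup are illustrative assumptions; a real fraud model would use many features and far more data.

```python
# Hypothetical labeled examples: (transaction amount, is_fraud)
EXAMPLES = [(120, False), (300, False), (950, False), (1800, False),
            (2600, True), (4100, True), (7200, True)]

def hand_coded_rule(amount):
    """Traditional programming: a developer picks the threshold."""
    return amount > 5000

def learn_threshold(examples):
    """Machine learning in miniature: choose the threshold that
    misclassifies the fewest labeled examples."""
    candidates = sorted(amount for amount, _ in examples)
    best_threshold, best_errors = None, len(examples) + 1
    for t in candidates:
        errors = sum((amount > t) != is_fraud for amount, is_fraud in examples)
        if errors < best_errors:
            best_threshold, best_errors = t, errors
    return best_threshold

LEARNED_THRESHOLD = learn_threshold(EXAMPLES)

def learned_rule(amount):
    """Apply the rule the algorithm derived from the data."""
    return amount > LEARNED_THRESHOLD

print(LEARNED_THRESHOLD, hand_coded_rule(4100), learned_rule(4100))
```

On this toy data the hand-coded rule misses the 4,100 transaction, while the learned rule flags it because the examples placed the boundary lower.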

So, the key differences are:

- Learning from data instead of hand-coded logic

- Adapting over time as models are retrained on new data

- Handling complexity that’s too hard to describe with simple if/else rules

That’s why machine learning has become central to modern AI solutions, from predictive modelling and data-driven automation to advanced recommendation engines and classification systems.

Core Types Of Machine Learning

There are three core families of machine learning we work with most often: supervised, unsupervised, and reinforcement learning.

Supervised Learning: Teaching Machines With Labeled Examples

Supervised learning is used when we have historical data with known outcomes, called labels. We train a model to map inputs to those labels.

Common supervised learning tasks include:

- Classification – Predicting a discrete category (spam vs not spam, churn vs no churn, fraud vs not fraud)

- Regression – Predicting a continuous value (house price, demand next week, lifetime value of a customer)

We use supervised learning for many practical predictive modelling problems, such as:

- Forecasting sales or demand

- Predicting customer churn

- Estimating risk scores in finance or insurance
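To make the regression case concrete, here is a small sketch that fits a straight line to labeled examples using ordinary least squares. The house-price numbers and the names `fit_line` and `predict_price` are made up for illustration; in practice we would normally reach for an established library rather than hand-rolled code.

```python
def fit_line(xs, ys):
    """Ordinary least squares for a single input feature."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical training data: floor area (m^2) -> price (thousands)
areas = [50, 70, 90, 110]
prices = [150, 210, 270, 330]

slope, intercept = fit_line(areas, prices)

def predict_price(area):
    """Map a new input to a predicted label using the fitted line."""
    return slope * area + intercept

print(predict_price(100))
```

The labels (known prices) are what make this supervised: the model is fitted so that its outputs match outcomes we already know.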

Unsupervised Learning: Finding Hidden Patterns In Data

Unsupervised learning works without labels. Instead, models look for structure and patterns in the data itself.

Useful unsupervised tasks include:

- Clustering – Grouping similar items (customers, products, behaviours) together

- Dimensionality reduction – Compressing data into fewer features while preserving structure

We use unsupervised learning to:

- Segment customers for marketing or personalisation

- Detect unusual patterns or anomalies in logs or transactions

- Explore new datasets when we don’t yet know what to look for
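The clustering idea can be sketched with a one-dimensional k-means loop. The spend figures, the starting centroids and the function name `kmeans_1d` are assumptions chosen to keep the example small; real segmentation would use several features and a more careful initialisation.

```python
def kmeans_1d(values, centroids, iterations=10):
    """Alternate between assigning points to the nearest centroid
    and moving each centroid to the mean of its points."""
    clusters = []
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        for v in values:
            nearest = min(range(len(centroids)),
                          key=lambda i: abs(v - centroids[i]))
            clusters[nearest].append(v)
        # Keep the old centroid if a cluster ends up empty.
        centroids = [sum(c) / len(c) if c else centroids[i]
                     for i, c in enumerate(clusters)]
    return centroids, clusters

# Hypothetical monthly spend per customer; note there are no labels.
monthly_spend = [20, 25, 30, 200, 220, 240]
centroids, clusters = kmeans_1d(monthly_spend, centroids=[0.0, 100.0])
print(centroids, clusters)
```

Nothing told the algorithm which customers were "low" or "high" spenders; the two groups emerge from the structure of the data alone.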

Reinforcement Learning: Learning Through Trial And Error

Reinforcement learning (RL) is inspired by how people and animals learn through trial and error. An agent interacts with an environment, takes actions, and receives rewards or penalties. Over time, it learns a strategy (policy) that maximises long-term reward.

RL is used for problems like:

- Game-playing agents (chess, Go, video games)

- Robotics and control systems

- Dynamic pricing or real-time decision-making

While RL is powerful, it’s typically used in more specialised settings compared with supervised and unsupervised learning, which power most business-focused AI solutions for now.
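For readers who want to see the agent, environment, reward and policy pieces in code, the sketch below runs tabular Q-learning on a toy five-cell corridor. The environment, the constants and the purely random exploration are simplifying assumptions made for illustration; they are not a recipe for real RL systems.

```python
import random

N_STATES = 5            # corridor cells 0..4; cell 4 is the goal
ACTIONS = (-1, +1)      # move left or move right
ALPHA, GAMMA = 0.5, 0.9 # learning rate and discount factor (assumed values)

def step(state, action):
    """Environment: move within the corridor, reward 1 on reaching the goal."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    reward = 1.0 if next_state == N_STATES - 1 else 0.0
    return next_state, reward

def train(episodes=200, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action index]
    for _ in range(episodes):
        state = 0
        while state != N_STATES - 1:
            a = rng.randrange(2)  # explore by acting at random
            next_state, reward = step(state, ACTIONS[a])
            # Q-learning update: nudge the estimate toward
            # reward + discounted best value of the next state.
            target = reward + GAMMA * max(q[next_state])
            q[state][a] += ALPHA * (target - q[state][a])
            state = next_state
    return q

Q = train()
# Greedy policy: in each non-goal cell, pick the action with the higher value.
POLICY = [ACTIONS[row.index(max(row))] for row in Q[:-1]]
print(POLICY)
```

Although the agent behaves randomly while learning, the values it accumulates favour moving right in every cell, which is the strategy that reaches the reward fastest.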

Key Concepts And Building Blocks Of Machine Learning

Regardless of the type of ML, most projects share the same core ingredients.

Data, Features, And Labels

Data is the raw material. It might be transaction histories, sensor readings, images, text, or user interactions.

From this data, we engineer features, the meaningful attributes the model uses. For example:

- For credit risk: income, repayment history, account age

- For product recommendations: past purchases, browsing history, product categories

If it’s a supervised learning problem, we also have labels:

- Did the customer churn? (yes/no)

- What was the actual sale amount?

Good features and clean, representative data usually matter more than using the latest, fanciest algorithm.
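As an illustration of feature engineering, the sketch below turns raw purchase records into one row of features per customer. The record layout, the feature names and the `build_features` helper are hypothetical; the right features always depend on the problem at hand.

```python
from datetime import date

# Hypothetical raw records: (customer_id, purchase_date, amount)
RAW = [
    ("c1", date(2024, 1, 5), 40.0),
    ("c1", date(2024, 3, 1), 60.0),
    ("c2", date(2024, 2, 10), 15.0),
]

def build_features(records, as_of):
    """Aggregate raw purchases into one feature row per customer."""
    features = {}
    for customer, day, amount in records:
        row = features.setdefault(
            customer,
            {"n_purchases": 0, "total_spend": 0.0, "days_since_last": None},
        )
        row["n_purchases"] += 1
        row["total_spend"] += amount
        gap = (as_of - day).days
        if row["days_since_last"] is None or gap < row["days_since_last"]:
            row["days_since_last"] = gap
    for row in features.values():
        row["avg_spend"] = row["total_spend"] / row["n_purchases"]
    return features

FEATURES = build_features(RAW, as_of=date(2024, 3, 31))
print(FEATURES["c1"])
```

A model never sees the raw log; it sees rows like these, which is why the choice of features shapes what it can learn.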

Training, Validation, And Testing

We usually split our dataset into three parts:

- Training set – Used to fit the model

- Validation set – Used to tune hyperparameters and compare approaches

- Test set – Held back until the end to estimate how the model will perform on truly unseen data
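A three-way split can be sketched in a few lines. The 60/20/20 proportions, the fixed seed and the name `three_way_split` are assumptions for the example; suitable proportions vary with dataset size.

```python
import random

def three_way_split(rows, val_frac=0.2, test_frac=0.2, seed=42):
    """Shuffle once, then carve off test and validation portions."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_frac)
    n_val = round(len(shuffled) * val_frac)
    test = shuffled[:n_test]
    val = shuffled[n_test:n_test + n_val]
    train = shuffled[n_test + n_val:]
    return train, val, test

train_rows, val_rows, test_rows = three_way_split(range(100))
print(len(train_rows), len(val_rows), len(test_rows))
```

Every row lands in exactly one of the three sets, so the test set stays untouched by training and tuning.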

This separation helps us avoid fooling ourselves. A model that performs well on training data but poorly on new data isn’t useful in practice.

Overfitting, Underfitting, And Model Generalization

Overfitting happens when a model memorises the training data instead of learning general patterns. It looks great in development but fails in production.

Underfitting is the opposite: the model is too simple to capture the true relationships in the data, leading to poor performance everywhere.

We’re always aiming for good generalisation: strong performance on new, unseen data. We improve generalisation with techniques like:

- Cross-validation

- Regularisation

- Early stopping

- Gathering more or better-quality data
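Cross-validation, the first technique in the list above, can be sketched as follows. The helper names and the deliberately trivial "predict the training mean" model are assumptions that keep the example short; the sketch also skips shuffling, which real workflows usually add.

```python
def k_fold_indices(n_rows, k):
    """Yield (train_idx, val_idx) pairs so each row is validated exactly once."""
    fold_sizes = [n_rows // k + (1 if i < n_rows % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val_idx = list(range(start, start + size))
        train_idx = [i for i in range(n_rows) if i < start or i >= start + size]
        yield train_idx, val_idx
        start += size

def cross_val_mae(ys, k=5):
    """Average validation error of a mean-only model across k folds."""
    fold_errors = []
    for train_idx, val_idx in k_fold_indices(len(ys), k):
        prediction = sum(ys[i] for i in train_idx) / len(train_idx)  # "fit"
        mae = sum(abs(ys[i] - prediction) for i in val_idx) / len(val_idx)
        fold_errors.append(mae)
    return sum(fold_errors) / len(fold_errors)

folds = list(k_fold_indices(10, 3))
print([len(val) for _, val in folds])
print(cross_val_mae([5.0] * 10, k=5))
```

Because every score comes from rows the model did not see during fitting, the averaged error is a more honest estimate of generalisation than the training error.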

Common Machine Learning Algorithms And What They Are Used For

There are many algorithms in machine learning, but a few families appear again and again in real projects.

Linear Models And Decision Trees

Linear models (like linear regression and logistic regression) are simple, fast, and surprisingly strong baselines.

We use them when:

- We need interpretability (easy-to-explain coefficients)

- Relationships are roughly linear or we can engineer features to make them so
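To show what an easy-to-explain coefficient looks like, here is a sketch of logistic regression with one feature, trained by plain gradient descent. The churn data, learning rate and epoch count are illustrative assumptions, and production code would normally rely on a tested library.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def fit_logistic(xs, ys, lr=0.1, epochs=2000):
    """Gradient descent on the average log loss for one feature."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = sum((sigmoid(w * x + b) - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum((sigmoid(w * x + b) - y) for x, y in zip(xs, ys)) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

# Hypothetical data: months of inactivity -> churned (1) or stayed (0)
months_inactive = [0, 1, 2, 3, 6, 7, 8, 9]
churned = [0, 0, 0, 0, 1, 1, 1, 1]

w, b = fit_logistic(months_inactive, churned)

def predict_churn(months):
    """Classify as churn when the predicted probability is at least 0.5."""
    return 1 if sigmoid(w * months + b) >= 0.5 else 0

print(w > 0, predict_churn(1), predict_churn(8))
```

The sign of `w` carries the explanation: a positive weight means more inactivity pushes the predicted churn probability up.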

Decision trees split data based on feature values, forming a tree of decisions. They’re:

- Intuitive to visualise and explain

- Able to capture non-linear relationships

They’re common for tasks like credit scoring, risk assessment, or basic classification problems.
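A decision tree is built from repeated splits, and a single split can be sketched as below. The example scores candidate thresholds by Gini impurity, one common criterion; the credit data and the names `gini` and `best_split` are assumptions for illustration.

```python
def gini(labels):
    """Gini impurity of a list of 0/1 labels (0 means perfectly pure)."""
    if not labels:
        return 0.0
    p = sum(labels) / len(labels)
    return 2 * p * (1 - p)

def best_split(xs, ys):
    """Try midpoints between distinct values; keep the purest split."""
    best_threshold, best_score = None, float("inf")
    values = sorted(set(xs))
    for lo, hi in zip(values, values[1:]):
        threshold = (lo + hi) / 2
        left = [y for x, y in zip(xs, ys) if x <= threshold]
        right = [y for x, y in zip(xs, ys) if x > threshold]
        # Weighted average impurity of the two child nodes.
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(ys)
        if score < best_score:
            best_threshold, best_score = threshold, score
    return best_threshold, best_score

# Hypothetical data: missed repayments -> defaulted (1) or not (0)
missed_payments = [0, 0, 1, 1, 3, 4, 5]
defaulted = [0, 0, 0, 1, 1, 1, 1]

threshold, score = best_split(missed_payments, defaulted)
print(threshold, round(score, 4))
```

A full tree applies the same search recursively to each side of the split, which is how it captures non-linear relationships while staying readable as a set of if/else questions.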

Ensemble Methods And Random Forests

Ensemble methods combine multiple models to create a stronger overall predictor.

Popular ensemble methods include:

- Random forests – Many decision trees, each trained on a different random subset of the data and features, whose predictions are combined by voting or averaging

- Gradient boosting machines (GBM) – Trees built sequentially, each trying to correct the errors of the previous ones

These are often our go-to for tabular business data (transactions, users, products) because they:

- Handle mixed data types well

- Capture complex interactions

- Deliver strong accuracy with relatively low tuning effort
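The core ensemble idea, combining several imperfect models, can be sketched with simple majority voting. This is not a random forest or gradient boosting implementation; the three hand-written rules and the transactions are hypothetical stand-ins for trained models.

```python
from collections import Counter

# Three weak, hypothetical fraud rules standing in for trained models.
def by_amount(t):
    return t["amount"] > 1000

def by_country(t):
    return t["country"] != t["home_country"]

def by_hour(t):
    return t["hour"] < 6

MODELS = [by_amount, by_country, by_hour]

def majority_vote(predictions):
    """Return the most common prediction among the models."""
    return Counter(predictions).most_common(1)[0][0]

def ensemble(t):
    return majority_vote([model(t) for model in MODELS])

# Hypothetical labeled transactions: (features, is_fraud)
EXAMPLES = [
    ({"amount": 5000, "country": "AU", "home_country": "AU", "hour": 3}, True),
    ({"amount": 200, "country": "XX", "home_country": "AU", "hour": 2}, True),
    ({"amount": 50, "country": "AU", "home_country": "AU", "hour": 4}, False),
]

def accuracy(model, examples):
    return sum(model(t) == label for t, label in examples) / len(examples)

for model in MODELS + [ensemble]:
    print(model.__name__, accuracy(model, EXAMPLES))
```

Each rule is wrong on a different transaction, so the vote cancels out the individual mistakes. Ensembles help most when their members make different errors.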

Neural Networks And Deep Learning

Neural networks are inspired by the structure of the brain, consisting of layers of interconnected nodes (neurons). Deep learning refers to neural networks with many layers.
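The layered structure can be illustrated with a tiny two-layer network computing XOR, a function no single linear model can represent. The weights below are set by hand for the example rather than learned, and the helper names are our own.

```python
def relu(values):
    """Activation function: keep positives, zero out negatives."""
    return [max(0.0, v) for v in values]

def dense(inputs, weights, biases):
    """One fully connected layer: weighted sum plus bias per neuron."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

# Hand-set (not trained) parameters for a 2-2-1 network.
W1 = [[1.0, 1.0], [1.0, 1.0]]
B1 = [0.0, -1.0]
W2 = [[1.0, -2.0]]
B2 = [0.0]

def forward(x):
    """Forward pass: input -> hidden layer with ReLU -> single output."""
    hidden = relu(dense(x, W1, B1))
    return dense(hidden, W2, B2)[0]

for pair in ([0, 0], [0, 1], [1, 0], [1, 1]):
    print(pair, forward(pair))
```

Training a real network means finding such weights automatically from data; deep learning stacks many more layers of the same basic operation.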

They dominate in areas like:

- Computer vision – Image classification, object detection, medical imaging

- Natural language processing (NLP) – Chatbots, sentiment analysis, document classification

- Speech and audio – Voice recognition, speech-to-text

Deep learning powers many of the most advanced applied artificial intelligence systems we see today, especially when we have very large datasets.

Real-World Applications Of Machine Learning

Machine learning shows up in far more places than we often realise.

Everyday Consumer Uses

We interact with ML daily, sometimes without noticing:

- Recommendation systems on streaming platforms and online stores

- Email spam filters that keep inboxes usable

- Personalised feeds on social media and news sites

- Navigation apps using live traffic prediction

All of these rely on custom machine learning models tuned to user behaviour and context.

Business And Industry Use Cases

In organisations, ML is increasingly central to AI solutions for business, improving efficiency and decision-making.

Examples include:

- Predictive analytics and forecasting – Sales, inventory, demand, and capacity planning

- Data-driven automation – Intelligent routing of support tickets, automated document processing, invoice matching

- Fraud and anomaly detection – Flagging unusual behaviour in finance, e-commerce, or cybersecurity

- Recommendation and personalisation – For e-commerce, SaaS products, and media platforms

- Process optimisation – Identifying bottlenecks in operations, logistics, and supply chains

These systems depend on solid ML engineering, robust data pipelines, and the right AI strategy and implementation to deliver measurable ROI.

Scientific And Social Impact

Beyond business, machine learning drives breakthroughs in:

- Healthcare – Early disease detection from medical images and patient data

- Climate science – Modelling weather patterns and climate change impacts

- Social sciences – Analysing large-scale survey and behavioural data

In each case, thoughtful data analytics and modelling enable insights that would be impossible to obtain manually.

How A Basic Machine Learning Project Works In Practice

While every project is unique, most follow a similar end-to-end lifecycle.

Defining The Problem And Collecting Data

We start by clarifying the business question and success metrics:

- What decision are we trying to improve?

- What does a “good” prediction look like?

- How will we measure impact (revenue, cost savings, time saved, accuracy)?

Then we identify and assemble the right data sources:

- Internal systems (CRM, ERP, analytics platforms)

- Third-party data (market data, demographics)

- Logs and event streams (user actions, transactions)

This is where we decide if we need custom machine learning models or if simpler analytics will do.

Training, Evaluating, And Improving The Model

Once the data is prepared, we:

- Engineer features that capture the signal we care about

- Select algorithms appropriate for the problem (e.g., random forest for tabular data, neural networks for images/text)

- Train models on historical data

- Evaluate performance against baseline metrics and simple heuristics

We iterate on this loop, tuning hyperparameters, trying different models, and refining features, until we achieve reliable performance and stable generalisation.
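Evaluating against a baseline can be sketched like this. The labels and predictions are invented, and the baseline is the simple heuristic "always predict the majority class"; the function names are illustrative.

```python
def accuracy(y_true, y_pred):
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def precision_recall(y_true, y_pred):
    """Precision: how many flagged cases were real.
    Recall: how many real cases were flagged."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall

# Hypothetical imbalanced problem: only 2 of 10 cases are positive.
y_true        = [0, 0, 0, 0, 0, 0, 0, 0, 1, 1]
model_pred    = [0, 0, 0, 0, 0, 0, 0, 1, 1, 0]
baseline_pred = [0] * 10  # heuristic: always predict the majority class

print(accuracy(y_true, model_pred), precision_recall(y_true, model_pred))
print(accuracy(y_true, baseline_pred), precision_recall(y_true, baseline_pred))
```

Both score 80% accuracy here, yet the baseline never catches a positive case. That is why we compare against simple heuristics and look beyond a single metric.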

Deploying And Monitoring In The Real World

A strong model in a notebook isn’t enough. To create value, we need deployment and monitoring:

- Deploy the model into production systems (APIs, dashboards, batch jobs)

- Integrate it into workflows where people or systems use its predictions

- Monitor performance over time, watching for data drift or changing patterns

- Retrain periodically as new data arrives
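One very simple way to watch for data drift is to compare a feature's live mean with its training-time mean. The sketch below does this in units of the reference standard deviation; the threshold of 2.0 and the sample values are arbitrary assumptions, and production monitoring typically uses richer statistics.

```python
from statistics import mean, stdev

def mean_shift(reference, live):
    """How many reference standard deviations the live mean has moved."""
    return abs(mean(live) - mean(reference)) / stdev(reference)

def has_drifted(reference, live, threshold=2.0):
    """Flag drift when the shift exceeds an (assumed) alert threshold."""
    return mean_shift(reference, live) > threshold

# Hypothetical values of one input feature.
REFERENCE    = [100, 102, 98, 101, 99]    # seen at training time
LIVE_OK      = [101, 99, 100, 102, 98]    # recent production data
LIVE_SHIFTED = [110, 112, 108, 111, 109]  # production data after a change

print(has_drifted(REFERENCE, LIVE_OK), has_drifted(REFERENCE, LIVE_SHIFTED))
```

A check like this would run on a schedule, with an alert prompting investigation or retraining when inputs no longer resemble the training data.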

This full lifecycle is what turns machine learning development into robust, ongoing AI solutions for business, rather than one-off experiments.

Challenges, Risks, And The Future Of Machine Learning

Machine learning is powerful, but it’s not magic. There are meaningful challenges and responsibilities that come with using it.

Bias, Fairness, And Privacy Concerns

ML models learn from historical data, which can contain biases. If we don’t actively check and correct for this, models can:

- Perpetuate existing inequalities

- Treat certain groups unfairly
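One basic check is to compare how often the model gives a positive outcome to each group. The sketch below computes that gap, sometimes called a demographic parity difference; the groups, the predictions and the function names are hypothetical, and this is only one of several fairness measures.

```python
from collections import defaultdict

def positive_rates(groups, predictions):
    """Share of positive predictions within each group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for group, prediction in zip(groups, predictions):
        totals[group] += 1
        positives[group] += prediction
    return {group: positives[group] / totals[group] for group in totals}

def parity_gap(groups, predictions):
    """Difference between the highest and lowest group rates."""
    rates = positive_rates(groups, predictions)
    return max(rates.values()) - min(rates.values())

# Hypothetical loan approvals (1 = approved) for two groups.
groups   = ["a", "a", "a", "a", "b", "b", "b", "b"]
approved = [1, 1, 1, 0, 1, 0, 0, 0]

print(positive_rates(groups, approved), parity_gap(groups, approved))
```

A large gap does not prove unfair treatment on its own, but it is a signal that the model and its training data deserve a closer look.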

We also need to protect user privacy, especially when handling sensitive data. That means:

- Minimising data collection where possible

- Applying security best practices

- Complying with relevant regulations and policies

Scalability, Interpretability, And Regulation

As models are deployed more widely:

- Scalability becomes key: systems must handle large volumes of data and predictions reliably

- Interpretability matters: stakeholders need to understand why a model made a decision, especially in regulated sectors like finance or healthcare

- Regulation is evolving: governments and organisations are developing frameworks for responsible AI use

Balancing accuracy, transparency, and compliance is now a core part of ML engineering.

Trends Shaping The Next Wave Of Machine Learning

We’re seeing several important trends shape the future of ML:

- Foundation and large language models powering more capable NLP systems

- MLOps practices bringing software engineering rigour to ML workflows

- AutoML tools lowering the barrier to entry for non-experts

- Edge and on-device models enabling real-time intelligence without constant cloud connectivity

Together, these shifts are making machine learning more accessible, more powerful, and more deeply integrated into everyday products and services.

Conclusion

Machine learning has moved from research labs into the core of how modern organisations operate. By learning patterns from data instead of relying on rigid rules, ML allows us to automate complex decisions, uncover hidden insights, and scale smarter.

When we understand the core types of ML, the key concepts like training and generalisation, and the common algorithms and applications, we’re better equipped to spot real opportunities and to avoid the hype.

Whether we’re building predictive models, powering recommendation systems, automating processes, or exploring new data, machine learning gives us a flexible toolkit for turning information into impact. The organisations that learn to use it thoughtfully and responsibly will be the ones that stay ahead as the data-driven future continues to unfold.


Key Takeaways

- Machine learning shifts from hand-coded rules to data-driven models that learn patterns and make predictions, enabling more flexible and scalable decision-making.

- The three main types of machine learning—supervised, unsupervised, and reinforcement learning—cover labeled prediction tasks, pattern discovery, and trial-and-error decision strategies.

- Successful machine learning projects rely more on high-quality data, meaningful features, and robust evaluation (training/validation/testing) than on using the most complex algorithms.

- Common machine learning algorithms like linear models, decision trees, ensembles, and deep neural networks power applications from spam filters and recommendations to medical imaging and forecasting.

- Deploying machine learning responsibly requires monitoring for bias, ensuring privacy and interpretability, and using MLOps practices to keep models accurate, compliant, and aligned with real-world change.

Frequently Asked Questions About Machine Learning

What is machine learning and how does it differ from traditional programming?

Machine learning is a way for computers to learn patterns from data and make predictions without being explicitly programmed for every scenario. In traditional programming, developers hand-code rules. In machine learning, algorithms learn those rules from labeled examples, adapt over time, and handle complex relationships that are hard to express with simple logic.

What are the main types of machine learning and when are they used?

The three core types are supervised, unsupervised, and reinforcement learning. Supervised learning uses labeled data for tasks like classification and regression. Unsupervised learning finds patterns via clustering or dimensionality reduction. Reinforcement learning trains an agent through rewards and penalties for applications such as games, robotics, and dynamic decision-making.

How is machine learning used in real-world business applications?

Machine learning powers predictive analytics, forecasting, and recommendation systems in many industries. Businesses use it to predict sales and demand, detect fraud and anomalies, automate document processing and ticket routing, personalize user experiences, and optimize logistics and operations. These applications rely on robust data pipelines, ML engineering, and ongoing monitoring in production.

What are the biggest challenges and risks when deploying machine learning models?

Key challenges include data bias and fairness, where models may reinforce historical inequalities; privacy concerns around sensitive information; and the need for scalability and interpretability. Organizations must comply with evolving AI regulations, ensure stakeholders understand model decisions, secure data, and monitor performance over time to handle drift and changing conditions.
