If you’ve heard “CRO” tossed around and wondered what it actually means for your business, you’re not alone. We see teams mix up acronyms, chase vanity metrics, and leave easy wins on the table. Here’s the short version: if your site already gets traffic, conversion rate optimisation (CRO) is the most cost‑effective way to grow revenue. We’ll explain the different meanings of CRO, why it matters in marketing, and how we at AGR Technology run a structured, ethical optimisation program that delivers measurable uplift. If you want help, we’re ready to jump in with a practical roadmap and hands‑on testing.

Need advice fast? Book a free CRO consult with AGR Technology and get an action list for your business.

Definitions – What CRO Means

Conversion Rate Optimization

In marketing and product, CRO stands for conversion rate optimisation, the practice of improving the percentage of users who complete a desired action: purchase, lead submission, free trial, demo request, app install, or any micro‑conversion such as add‑to‑cart or email signup. It’s a mix of research, UX, analytics, and experimentation (A/B and multivariate testing) aimed at lifting revenue per visitor without spending more on ads.

CRO In Marketing: Why It Matters

Traffic is getting pricier. Privacy changes reduce targeting precision. Yet most websites still leak conversions due to friction, unclear messaging, slow load times, or trust gaps. CRO plugs those leaks.

Here’s why it matters:

- Higher ROI on existing traffic: Lift conversion rate and average order value (AOV) without increasing media spend.

- Compounding gains: Small uplifts across the funnel (e.g., +8% add‑to‑cart, +5% checkout completion) stack into meaningful revenue.

- Decision quality: Testing replaces guesswork with evidence. Stakeholders align faster when numbers speak.

- Customer experience: Better UX and accessibility improve satisfaction and reduce support load.
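
To make the compounding point concrete, here is a minimal sketch of how step-level uplifts stack. It assumes the uplifts apply to sequential funnel steps and act independently, so they multiply; the function name `compound_uplift` is ours, and real funnels will rarely behave this cleanly.

```python
from math import prod

def compound_uplift(step_uplifts):
    """Combine relative uplifts from sequential funnel steps into one overall uplift.

    Assumes each step's uplift is independent of the others, so they multiply.
    """
    return prod(1 + u for u in step_uplifts) - 1

# The example from the list above: +8% add-to-cart and +5% checkout completion.
overall = compound_uplift([0.08, 0.05])
print(f"{overall:.1%}")  # 13.4%
```

Two modest wins combine into roughly a 13% lift in completed orders, which is more than the sum of the two on their own.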

Where CRO shines:

- Ecommerce (Shopify, WooCommerce, BigCommerce, Magento): Checkout friction, shipping clarity, payment trust, merchandising, search and filtering.

- B2B lead gen: Form optimisation, social proof, pricing clarity, demo scheduling, qualification.

- SaaS: Onboarding, paywall and pricing page framing, payback messaging, trial‑to‑paid conversion.

At AGR Technology, we build programs that respect brand, compliance, and accessibility while targeting measurable uplift in conversion and revenue per visitor.

How CRO Works: A Practical Process

Research And Insight Gathering

We start with a clear picture of user behaviour and friction points:

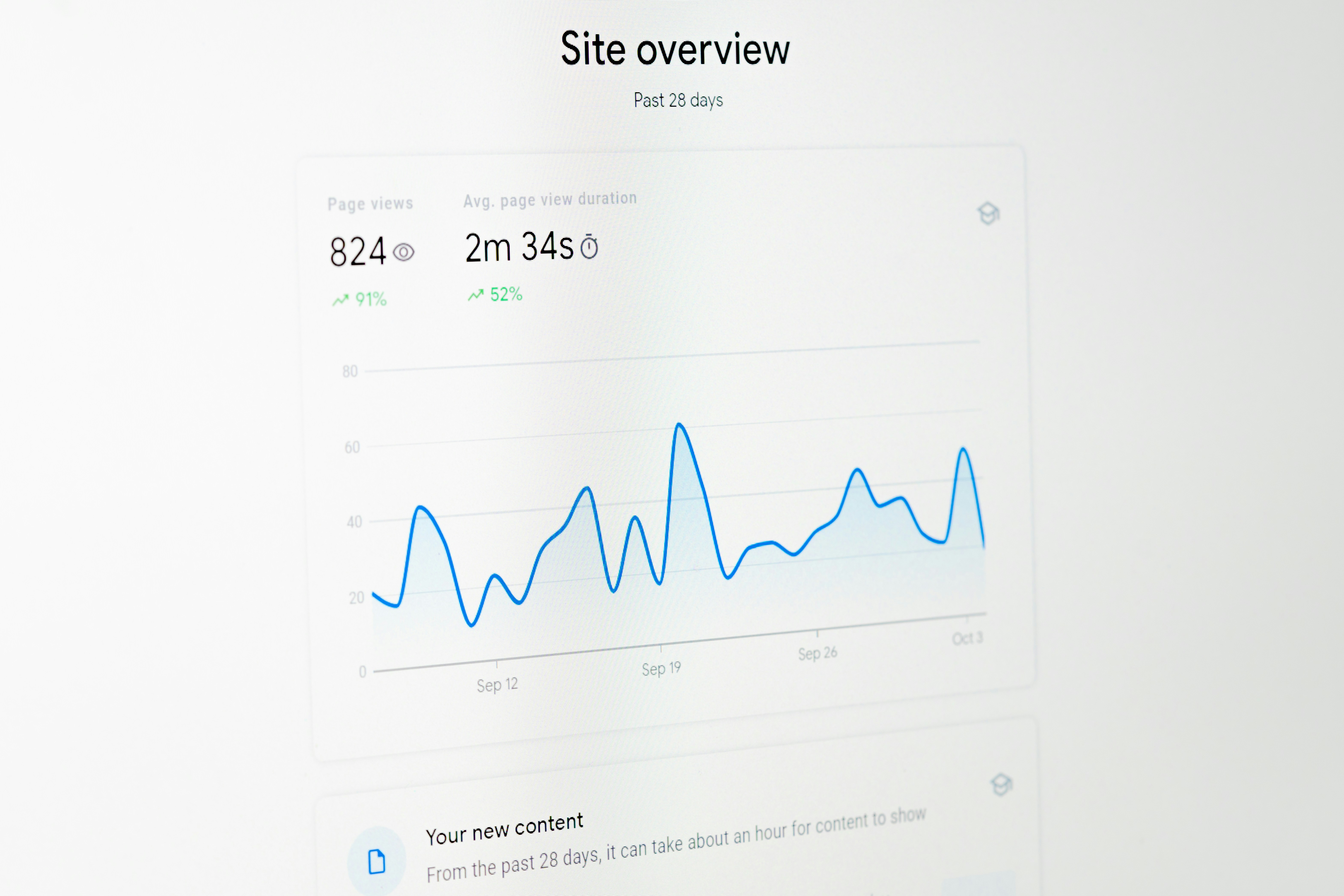

- Analytics audit: Ensure GA4, Google Tag Manager, and events are accurate. No good test runs on bad data.

- Quant analysis: Funnel analysis, segmentation (device, channel, geo), cohort trends, bounce/exit diagnostics, page speed.

- Qual research: Heuristic reviews, UX best‑practice checks, heatmaps and scroll depth, session recordings, on‑page polls, customer interviews, chat logs, and support tickets.

- Technical checks: Core Web Vitals, accessibility (WCAG), form validation, tracking reliability, and SEO conflicts.

Deliverable: A prioritised list of issues and opportunities mapped to business goals.

Hypothesis And Prioritization

Every experiment gets a crisp hypothesis grounded in evidence. We use an ICE/PIE‑style scoring (Impact, Confidence, Effort) plus statistical feasibility (sample size and minimum detectable effect) to plan a test roadmap. High‑impact, low‑effort tests go first; complex builds are sequenced to avoid clashes.
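
As a rough illustration of this kind of scoring, the sketch below ranks a backlog by impact times confidence divided by effort. The formula, the 1 to 10 scales, the `Idea` class, and the sample ideas are all assumptions for the example, not a fixed standard; teams weight these factors differently.

```python
from dataclasses import dataclass

@dataclass
class Idea:
    name: str
    impact: int      # expected business impact, 1 (low) to 10 (high)
    confidence: int  # strength of supporting evidence, 1 to 10
    effort: int      # build and QA cost, 1 (trivial) to 10 (heavy)

    @property
    def score(self) -> float:
        # Assumed formula: reward impact and confidence, penalise effort.
        return self.impact * self.confidence / self.effort

# Hypothetical backlog entries with made-up scores.
ideas = [
    Idea("Rebuild pricing comparison table", impact=9, confidence=5, effort=8),
    Idea("Clarify value proposition above the fold", impact=8, confidence=7, effort=2),
    Idea("Add inline form validation", impact=6, confidence=8, effort=3),
]

# Highest score first: high-impact, low-effort tests rise to the top.
roadmap = sorted(ideas, key=lambda idea: idea.score, reverse=True)
for idea in roadmap:
    print(f"{idea.score:5.1f}  {idea.name}")
```

The score only orders the backlog; statistical feasibility (can the page reach the required sample size?) still has to be checked separately before a test is scheduled.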

Examples we commonly test:

- Value proposition clarity above the fold

- Social proof placement and strength (ratings, reviews, logos, case studies)

- Navigation and search usability

- Form fields and error handling (progressive profiling, inline validation)

- Checkout trust elements (payment icons, delivery times, returns policy)

- Pricing page hierarchy, plan naming, and comparison tables

- Personalisation by device, lifecycle stage, or referrer

Experimentation And Iteration

We use controlled experiments to validate changes:

- A/B/n and multivariate testing with guardrails for significance and power

- Feature flags for safe rollouts

- Clear success metrics: a primary metric (e.g., add‑to‑cart rate) plus guardrail metrics (e.g., refund rate)

- Post‑test analysis including segment checks and QA

Winning variants are shipped. Losers teach us something, and that learning feeds the next hypothesis. We maintain a living knowledge base so you don’t re‑test the same ideas next quarter.
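
For readers who want to see what a basic post‑test check can look like, here is a minimal sketch of a two‑sided, two‑proportion z‑test using only the Python standard library. It is one common frequentist approach, not necessarily what a given testing platform runs internally, and the visitor and conversion counts are invented for illustration.

```python
from math import sqrt
from statistics import NormalDist

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Compare control (a) and variant (b) conversion rates.

    Returns (relative_lift, z_score, two_sided_p_value).
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both arms convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return p_b / p_a - 1, z, p_value

# Hypothetical result: 400/10,000 conversions in control, 460/10,000 in the variant.
lift, z, p_value = two_proportion_z_test(400, 10_000, 460, 10_000)
print(f"lift={lift:.1%}  z={z:.2f}  p={p_value:.3f}")
```

A p‑value under the pre‑agreed alpha is only part of the decision: guardrail metrics and segment checks still need to hold up before a variant ships.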

Core Metrics To Track

Conversion Rate And Baselines

Define the primary conversion (purchase, lead, trial) and the key micro‑conversions (product view, add‑to‑cart, checkout start). Establish baselines by device and channel, because mobile and desktop often behave very differently. Seasonality and promo periods matter too, so we normalise where needed.
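
A small sketch of segment baselining follows. It assumes session data has already been exported as `(device, channel, converted)` tuples; that shape, the function name `baseline_rates`, and the sample rows are hypothetical, and in practice the data would come from your analytics export.

```python
from collections import defaultdict

def baseline_rates(sessions):
    """Return conversion rate per (device, channel) segment.

    sessions: iterable of (device, channel, converted) tuples.
    """
    totals = defaultdict(lambda: [0, 0])  # segment -> [conversions, sessions]
    for device, channel, converted in sessions:
        bucket = totals[(device, channel)]
        bucket[0] += int(converted)
        bucket[1] += 1
    return {segment: conv / n for segment, (conv, n) in totals.items()}

# Tiny made-up sample, purely to show the shape of the output.
sessions = [
    ("mobile", "paid", False),
    ("mobile", "paid", True),
    ("mobile", "paid", False),
    ("mobile", "paid", False),
    ("desktop", "organic", True),
    ("desktop", "organic", False),
]
baselines = baseline_rates(sessions)
for segment, rate in sorted(baselines.items()):
    print(segment, f"{rate:.0%}")
```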

Revenue Per Visitor And AOV

For ecommerce, revenue per visitor (RPV) blends conversion rate and AOV, giving a single metric that captures both propensity to buy and how much they spend. We also watch:

- AOV and items per order

- Checkout completion rate by step

- Discount dependency and margin impact

- Customer lifetime value (where data is available)
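
The relationship between these ecommerce metrics is simple arithmetic: RPV is revenue divided by visitors, which equals conversion rate multiplied by AOV. The sketch below shows this with invented figures; the function name `funnel_metrics` is ours.

```python
def funnel_metrics(visitors, orders, revenue):
    """Return (conversion_rate, average_order_value, revenue_per_visitor)."""
    conversion_rate = orders / visitors
    aov = revenue / orders
    rpv = revenue / visitors
    return conversion_rate, aov, rpv

# Hypothetical month: 20,000 visitors, 500 orders, 42,500 in revenue.
conversion_rate, aov, rpv = funnel_metrics(20_000, 500, 42_500)
print(f"CR={conversion_rate:.2%}  AOV={aov:.2f}  RPV={rpv:.3f}")

# RPV can be recovered from the other two, which is why it captures both.
assert abs(rpv - conversion_rate * aov) < 1e-9
```

Because RPV moves when either conversion rate or AOV moves, it guards against "wins" that lift conversions by discounting away order value.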

For B2B/SaaS, we align with pipeline and payback: MQL to SQL conversion, demo‑to‑close, trial‑to‑paid, and churn.

Significance, Power, And Minimum Detectable Effect

We plan tests with statistics in mind:

- Significance (alpha): Risk of a false positive, commonly set at 5%.

- Power (1‑beta): Probability of detecting a real effect; we aim for 80–90%.

- Minimum Detectable Effect (MDE): The smallest improvement worth detecting. Lower MDE needs more sample.

We calculate required sample sizes upfront, set test durations to cover full business cycles (typically 1–3 weeks, avoiding holidays if behaviour skews), and lock the analysis plan before launch.
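
Here is a minimal sketch of that upfront calculation, using the standard normal‑approximation formula for comparing two proportions with a two‑sided test and equal traffic split. The function names, the 4% baseline, the 10% relative MDE, and the 4,000 daily visitors are illustrative assumptions; dedicated calculators may use slightly different formulas and give slightly different numbers.

```python
from math import ceil, sqrt
from statistics import NormalDist

def sample_size_per_variant(baseline, relative_mde, alpha=0.05, power=0.80):
    """Visitors needed in each arm to detect a relative lift of `relative_mde`."""
    p1 = baseline
    p2 = baseline * (1 + relative_mde)
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_beta = NormalDist().inv_cdf(power)
    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return ceil(numerator / (p2 - p1) ** 2)

def weeks_needed(n_per_variant, daily_visitors, variants=2):
    """Round the run time up to whole weeks so full weekly cycles are covered."""
    days = n_per_variant * variants / daily_visitors
    return ceil(days / 7)

# Hypothetical page: 4% baseline conversion, 10% relative MDE, 4,000 visitors a day.
n = sample_size_per_variant(baseline=0.04, relative_mde=0.10)
print(n, "visitors per variant,", weeks_needed(n, daily_visitors=4_000), "weeks")
```

Halving the MDE roughly quadruples the required sample, which is why low‑traffic pages are usually better suited to bolder changes than to fine‑tuning.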

Tools And Techniques

Research And Analytics Stack

- GA4 and Google Tag Manager for event tracking, funnels, and segments

- Server‑side tagging where privacy and data quality matter

- Heatmaps and session recordings (e.g., Hotjar, Clarity) for qualitative insight

- Form analytics to identify drop‑off by field and validation error

- Page speed and Core Web Vitals (Lighthouse, PageSpeed Insights)

- Accessibility checks against WCAG 2.2

- Survey/poll tools to capture objections, tasks, and intent in users’ words

Testing And Personalization Platforms

We work with Optimizely, VWO, Convert, and native frameworks. Google Optimize was sunset in 2023, so we migrate clients to supported platforms with proper QA and performance controls. For personalisation, we use lightweight rules or CDP‑driven segments where it adds value without creepiness.

Our team handles experiment design, build, QA (including flicker/FOUC checks), and post‑test analysis. We integrate with your stack (Shopify, WordPress, headless, or custom apps) without slowing the site.

Common Pitfalls And Best Practices

Avoiding Peeking And Sample Size Mistakes

Stopping a test the moment it looks like a winner (peeking) inflates false positives. We avoid this by:

- Pre‑defining sample size and duration

- Monitoring for anomalies, not outcomes

- Using sequential or Bayesian methods only with proper thresholds

We also keep test velocity realistic: fewer, well‑run tests beat a pile of inconclusive ones.
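
The effect of peeking is easy to demonstrate with a simulated A/A test, where both arms share the same true conversion rate and any "winner" is a false positive. The sketch below is illustrative only: the run counts, traffic per look, 10% rate, and seed are arbitrary assumptions, and it uses a simple z‑test at every look with no sequential correction.

```python
import random
from math import sqrt
from statistics import NormalDist

def p_value(conv_a, conv_b, n):
    """Two-sided z-test p-value for two arms with n visitors each."""
    pooled = (conv_a + conv_b) / (2 * n)
    se = sqrt(pooled * (1 - pooled) * 2 / n)
    if se == 0:
        return 1.0  # no conversions (or all conversions) in both arms
    z = (conv_b / n - conv_a / n) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))

def simulate_aa(runs=400, looks=5, per_look=200, rate=0.10, alpha=0.05, seed=1):
    """Return (false-positive rate when peeking, rate with one final look)."""
    rng = random.Random(seed)
    peeking_hits = fixed_hits = 0
    for _ in range(runs):
        conv_a = conv_b = 0
        flagged_any_look = False
        for look in range(1, looks + 1):
            conv_a += sum(rng.random() < rate for _ in range(per_look))
            conv_b += sum(rng.random() < rate for _ in range(per_look))
            significant = p_value(conv_a, conv_b, look * per_look) < alpha
            flagged_any_look = flagged_any_look or significant
        peeking_hits += flagged_any_look  # stopped at the first "win"
        fixed_hits += significant         # only the final, pre-planned look
    return peeking_hits / runs, fixed_hits / runs

peeking_rate, fixed_rate = simulate_aa()
print(f"peeking: {peeking_rate:.1%}  fixed horizon: {fixed_rate:.1%}")
```

With a single planned look the false‑positive rate should sit near alpha, while checking at every interim look can only push it higher, since every final‑look "win" is also counted under peeking.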

Balancing UX, Ethics, And Accessibility

CRO isn’t about tricking users. It’s about clarity and ease. Our standards:

- Honest messaging, no dark patterns

- Accessibility as a non‑negotiable (colour contrast, keyboard nav, ARIA labels)

- Respect for privacy and consent preferences

- Performance‑first builds to protect Core Web Vitals

Best practice quick wins we often carry out:

- Clear, specific CTAs (“Start free 14‑day trial”, “Get pricing”), not vague “Submit”

- Inline validation and fewer required form fields

- Delivery times and returns policy visible before checkout

- Prominent trust signals: reviews, guarantees, secure payment badges

- Mobile‑first layouts with fast, tappable UI elements

Conclusion

CRO can mean a few things, but for your marketing, it’s the engine that helps existing traffic convert better. With a structured program (research, prioritised hypotheses, disciplined experimentation, and tight analytics), you can lift conversion rate, AOV, and revenue per visitor without upping ad spend.

How we can help at AGR Technology:

- CRO audit and roadmap within 2–3 weeks

- End‑to‑end A/B testing and personalisation

- Analytics and tag governance in GA4 and GTM

- UX, accessibility, and performance improvements that stick

Ready to improve your site’s conversion rate? Book a free CRO consult with AGR Technology. We’ll review your data, highlight the biggest wins, and map out a comprehensive plan.

Frequently Asked Questions about CRO

What is CRO in marketing?

In marketing, CRO stands for conversion rate optimization: the process of increasing the percentage of visitors who take a desired action, like a purchase, demo request, or signup. It blends research, UX, analytics, and A/B testing to lift revenue per visitor without buying more traffic.

How does a CRO program work from start to finish?

A robust CRO program starts with analytics and UX research to find friction, then forms evidence‑based hypotheses prioritized by impact, confidence, and effort. Teams run controlled A/B or multivariate tests, track primary and guardrail metrics, ship winners, document learnings, and iterate for compounding gains.

Which metrics matter most in CRO?

Key metrics include primary conversion rate (purchase, lead, trial) and micro‑conversions (product views, add‑to‑cart, checkout start). For ecommerce, watch revenue per visitor and average order value. Plan tests with significance, power, and minimum detectable effect, and baseline results by device, channel, and seasonality.

Is CRO the same as SEO?

No. SEO attracts qualified traffic from search engines, while CRO turns existing traffic into more conversions and revenue. They’re complementary: faster pages, better UX, and clearer messaging from CRO can support SEO, but CRO focuses on on‑site behavior, testing, and conversion efficiency rather than rankings.

How long does CRO take to show results?

Timelines depend on traffic and the minimum detectable effect. A well‑planned A/B test often runs 1–3 weeks to cover full cycles. Many teams see first validated wins within 4–8 weeks, while sustained programs compound results over quarters as insights inform higher‑impact experiments and site improvements.


Alessio Rigoli is the founder of AGR Technology. He got his start in IT, first in education and then in the private sector, helping businesses across various industries. Alessio maintains the blog and is interested in a range of current and emerging topics, including digital marketing, software development, cryptocurrency/blockchain, cyber security, Linux, and more.

Alessio Rigoli, AGR Technology
